In these past few months I've been getting myself more and more into the rabbit hole of optimization. I'm discovering that writing small programs to automate repetitive and boring processes is a lot of fun. I guess it's good that I enjoy doing such things, since, when it comes down to it, the main purpose of all computational technology is automation, and that's what I will probably be doing in whatever job I end up choosing.

Since developing this habit of wanting to automate everything, I started to look more and more for things which could be automated in my life. I was not surprised when I found out that there were so many things that could be improved upon, each of which came with something new to learn. Learning in this way is both fun and rewarding, because your learning has a clear objective: improve this thing; do it better than it is already being done; provide a new service for everyone to use. Even the smallest thing can make you feel like you've created something cool, which pushes you to do more and more.

This blog post is about one such challenge I proposed to myself. The problem? Well, it is about the news-board of the official site of my university course.

I'm currently enrolled in a Computer Science master's degree program at Tor Vergata, a university in Rome, and the official site for my degree can be found at http://www.informatica.uniroma2.it/. I have various issues with how the site is set up and managed (why no SSL/TLS?), too many for a single blog post. Without ranting too much, I'll get straight to the point: the way news and announcements are managed is, in my opinion, just plain horrible. Having said that, I don't like to express my disappointment about something only to do nothing about it, so I'll first point out where the flaws are, and then I'll offer a solution which, in my opinion, works better and should have been implemented already. We'll see how the solution makes use of RSS, an amazingly simple but powerful technology that everyone should at least know about. So, let's get started.

# The Problem

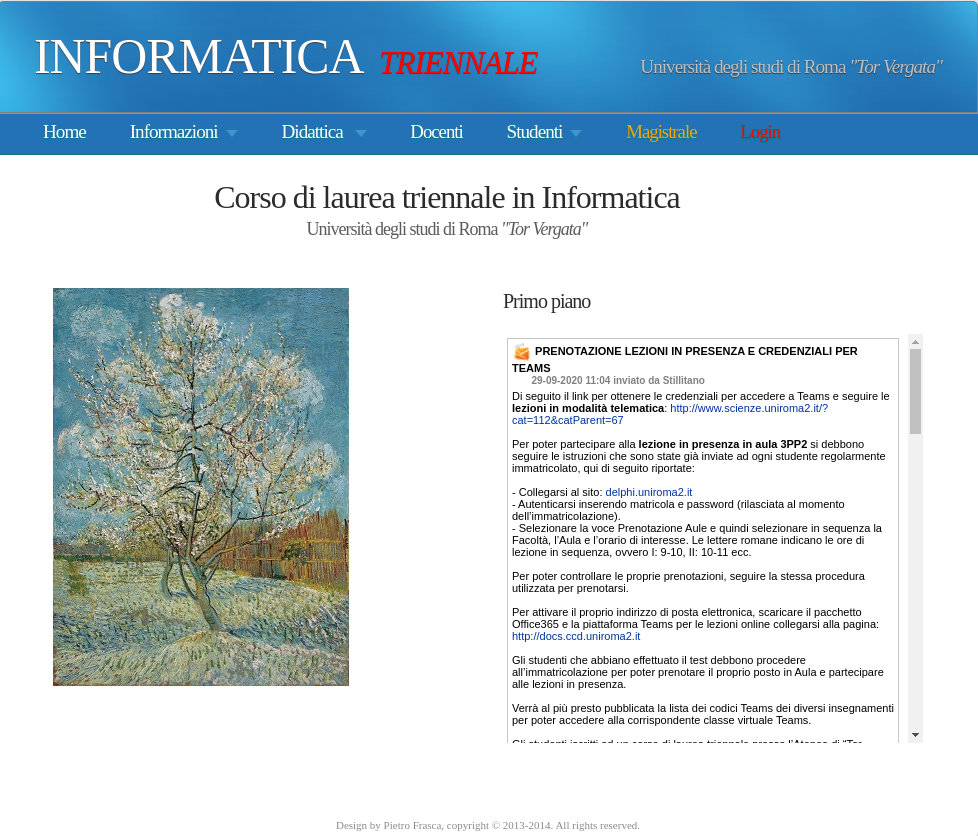

When you go to the official site of my course, you are presented with the view above.

What interests us is not Van Gogh's painting, The Pink Peach Tree (a nice reference to the fact that Computer Science heavily uses tree-like data structures, although the trees that we use are upside down, with the root at the top and the leaves at the bottom), but rather the news section, the part below the text "Primo piano" (roughly, "top stories").

There are mainly two issues with the way these news items are managed:

- Only the most "recent" news items are shown, i.e. those posted within a certain timeframe. As more and more news gets added, the old items are no longer shown.
- There is no mechanism that notifies students when news is posted.

While the second issue is one of efficiency, i.e. it forces students to manually check the site every time to know whether something new has been posted, the first one is actually quite serious, because these news items may contain important information. I find it hard to understand how anyone could consider this a decent way to manage news and announcements.

Just the other day a colleague of mine, knowing about the solution I will shortly be discussing, asked me if I had saved an announcement posted on 27 April 2020, detailing the guidelines to follow for graduating in this COVID-19 world, which was later removed from the news-board as other news got added. I think it's unacceptable that something like this can happen.

So, this is it, as far as the problem goes. Before introducing my solution I will now talk in general about an amazing technology called RSS.

# RSS

RSS stands for Really Simple Syndication, and it is a format used by content producers on the web to notify users when new content is available to be read or watched, depending on its nature. It is essentially a notification mechanism, comparable to the one on your smartphone, but for web content instead of messages and app notifications.

The way RSS works is quite simple and can be described as follows:

- The web creator, say a blogger, writes and publishes a new post on their site. To actually notify all the users, they write some metadata about the new post inside a particular `.xml` file, called an *RSS feed*.
- Each user can choose to "subscribe" to the blogger's RSS feed. When new posts are published, each user can rely on an *RSS client*, which automatically checks all the RSS feeds the user has subscribed to, detects whether something new has been posted, and tells the user accordingly.
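To make the client side of this workflow concrete, here is a minimal sketch of the check an RSS client performs each time it polls a feed: parse the XML, look at every `<item>`, and report the ones it has not seen before. The helper name `new_items` is hypothetical, and identifying items by their `<guid>` (falling back to `<link>`) is an assumption of this sketch; a real client would also download the feed over HTTP and persist the `seen` set between runs.

```python
import xml.etree.ElementTree as ET


def new_items(feed_xml, seen):
    """Return the titles of items not seen before, and mark them as seen.

    Hypothetical helper: an item is identified by its <guid>, or by its
    <link> when no <guid> is present.
    """
    root = ET.fromstring(feed_xml)
    fresh = []
    for item in root.iter("item"):
        key = item.findtext("guid") or item.findtext("link")
        if key not in seen:
            seen.add(key)
            fresh.append(item.findtext("title"))
    return fresh


feed = """<rss version="2.0"><channel>
  <title>Example</title>
  <item><title>First post</title><guid>https://example.com/1</guid></item>
  <item><title>Second post</title><guid>https://example.com/2</guid></item>
</channel></rss>"""

seen = set()
print(new_items(feed, seen))  # → ['First post', 'Second post']
print(new_items(feed, seen))  # → []
```

The second poll returns nothing because both identifiers are already in `seen`: this is exactly what spares the user from re-reading the whole site.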

With this RSS-based workflow, the user no longer has to visit the blogger's site every time to check whether something new has been posted: he or she is notified by the RSS client as soon as it picks up the new entry. In other words, this mechanism makes consuming content on the internet more efficient.

Another advantage of RSS is that, being extremely simple, it doesn't do anything fancy with your feed of content. It doesn't follow the trends of big companies such as Instagram, Facebook, YouTube, Twitter and so on. It doesn't deploy AI techniques to make your feed more "interesting", more "personal" and more "useful" (and also more confusing, definitely more confusing). It just gets you what you want, how you want it, as soon as it's ready. Simple and efficient, what more could you ask for?

The syntax of the `.xml` file that specifies the RSS feed is quite simple. To give an example, I will show a few entries from the RSS feed of this very blog, which is available at https://leonardotamiano.xyz/index.xml.

The general structure is as follows: the first portion of the file contains some information about the channel, which represents the source of the content. It is formatted like this:

```xml
<rss xmlns:atom="http://www.w3.org/2005/Atom" version="2.0">
  <channel>
    <title>Leonardo Tamiano's Cyberspace</title>
    <link>https://leonardotamiano.xyz/</link>
    <description>
      Recent content on Leonardo Tamiano's Cyberspace
    </description>
    <generator>Hugo -- gohugo.io</generator>
    <language>en-us</language>
    <lastBuildDate>Thu, 03 Sep 2020 00:00:00 +0200</lastBuildDate>
    <atom:link href="https://leonardotamiano.xyz/index.xml"
               rel="self"
               type="application/rss+xml"/>
    <!-- Items representing content updates go here -->
  </channel>
</rss>
```

As we can see, the channel contains basic information such as the title of the blog, its link, a general description, and so on.

Then, inside the `channel` element, there are the items, each of which represents a single piece of content to be consumed by clients. Items have the following syntax:

```xml
<item>
  <title>How to manage e-mails in Emacs with Mu4e</title>
  <link>https://leonardotamiano.xyz/posts/mu4e-setup/</link>
  <pubDate>Tue, 28 Jul 2020 00:00:00 +0200</pubDate>
  <guid>https://leonardotamiano.xyz/posts/mu4e-setup/</guid>
  <description>In the past months I’ve spent quite a bunch of
  time trying to optimize the way I interact with technology. One of
  the most important changes I made in my personal workflow is the way
  I manage e-mails. Before this change I always used the browser as my
  go-to email client. I did not like this solution for various
  reasons, such as: browsers are slow; basic text editing was a pain,
  especially considering I’m very comfortable with Emacs default
  keybinds (yes, I may be crazy);</description>
</item>
```

Here we once again find basic information, but this time about a single blog post, or in general a single piece of content.
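Rather than concatenating strings by hand, a feed with this channel/item structure can also be produced with Python's standard `xml.etree.ElementTree` module, which takes care of escaping characters such as `&` and `<`. The following is a minimal sketch, not how Hugo generates its feed: the helper `build_feed`, the dictionary shape of `items` and the example values are all illustrative, and `email.utils.format_datetime` is used to get the RFC 822 date format that `<pubDate>` expects.

```python
import datetime
import xml.etree.ElementTree as ET
from email.utils import format_datetime


def build_feed(title, link, description, items):
    """Build an RSS 2.0 document; each item is a dict (hypothetical shape)."""
    rss = ET.Element("rss", version="2.0")
    channel = ET.SubElement(rss, "channel")
    ET.SubElement(channel, "title").text = title
    ET.SubElement(channel, "link").text = link
    ET.SubElement(channel, "description").text = description
    for data in items:
        item = ET.SubElement(channel, "item")
        ET.SubElement(item, "title").text = data["title"]
        ET.SubElement(item, "link").text = data["link"]
        # RFC 822 date, e.g. "Tue, 28 Jul 2020 00:00:00 +0200"
        ET.SubElement(item, "pubDate").text = format_datetime(data["date"])
        # The link doubles as the item's globally unique identifier
        ET.SubElement(item, "guid").text = data["link"]
    return ET.tostring(rss, encoding="unicode")


cest = datetime.timezone(datetime.timedelta(hours=2))  # fixed +02:00 offset
feed_xml = build_feed(
    "Example blog", "https://example.com/", "Recent content",
    [{"title": "Tips & tricks", "link": "https://example.com/posts/tips/",
      "date": datetime.datetime(2020, 7, 28, tzinfo=cest)}],
)
print(feed_xml)
```

The `&` in the title comes out as `&amp;` without any extra effort. The output is a single line; on Python 3.9+, `ET.indent(rss)` before serializing would pretty-print it.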

The site https://validator.w3.org/feed/docs/rss2.html contains the RSS 2.0 specification, which lists all the tags that can be put in an RSS feed, along with their meaning and how they ought to be used.

# My Solution

Being aware of the simplicity of RSS, when I thought about optimizing the news-board for my degree course I immediately thought about using it. The idea I had is as basic as it gets in computer science: scrape the data from the official site, which is badly managed, and wrap it in XML tags to form a well-maintained RSS feed. If I managed to do this, I could solve both problems at the same time: I would be notified when something new was posted, and I would no longer have to worry about news being deleted, since my RSS feed would keep every news item without ever deleting the old ones (because, once again, why the hell would you delete them?).
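The "never delete anything" part of this idea boils down to a merge: every time the site is scraped, keep everything already in the feed and add only the items that are not there yet. Here is a minimal sketch, assuming each scraped news item can be reduced to a `(title, date)` pair. Both helper names are hypothetical, and hashing title and date into an identifier is my own assumption, since the news-board provides no stable ID (a plain tuple would be enough for deduplication, but a hex string can also be used as an RSS `<guid>`).

```python
import hashlib


def news_id(title, date):
    """Hypothetical stable identifier for a news item that has no ID of its own."""
    return hashlib.sha1(f"{title}|{date}".encode("utf-8")).hexdigest()


def merge(feed_items, scraped):
    """Prepend the scraped items missing from the feed; never drop old ones.

    Both lists hold (title, date) pairs, newest first.
    """
    known = {news_id(title, date) for title, date in feed_items}
    fresh = [(title, date) for title, date in scraped
             if news_id(title, date) not in known]
    return fresh + feed_items


feed = [("News A", "20-04-2020 09:00")]
# First scrape: the board shows a new item on top of the old one
feed = merge(feed, [("News B", "27-04-2020 10:30"), ("News A", "20-04-2020 09:00")])
# Later scrape: "News A" has disappeared from the board, but stays in the feed
feed = merge(feed, [("News C", "04-05-2020 12:00"), ("News B", "27-04-2020 10:30")])
print([title for title, _ in feed])  # → ['News C', 'News B', 'News A']
```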

To actually code the solution I chose, of course, Python 3, and after a full morning of work I managed to do it. The final result of the process is two RSS feeds, which are available on this site itself at the following URLs.

The first feed contains the news posted on the news-board of the bachelor's section of the site, while the other contains the news posted on the master's section.

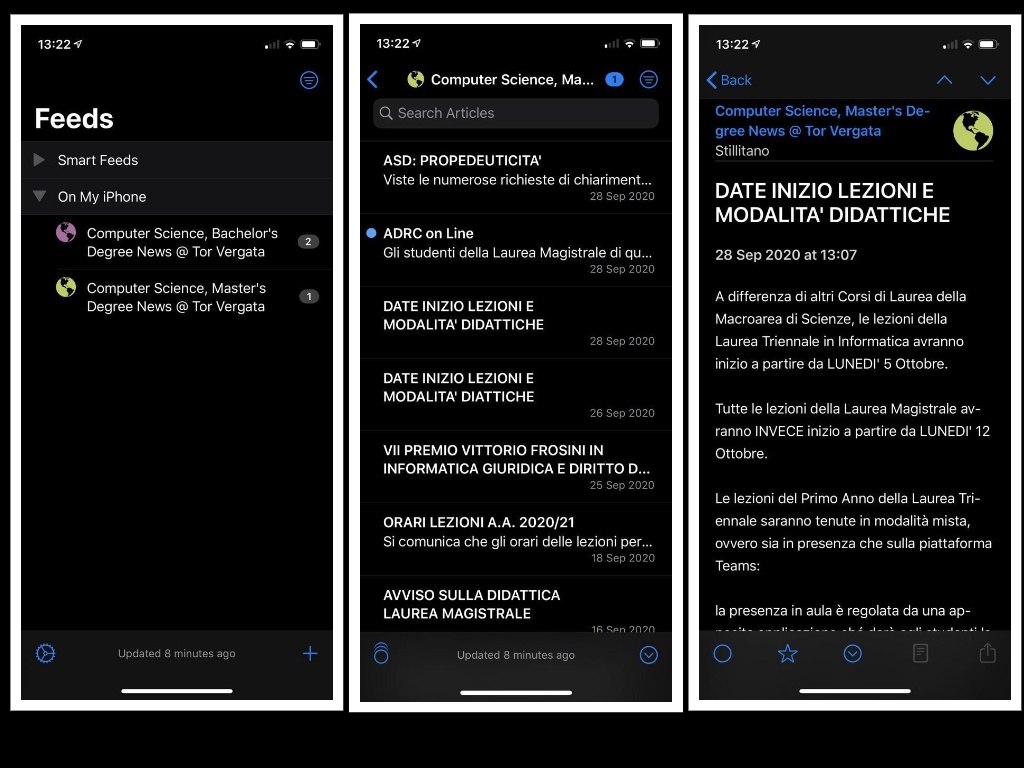

Both of these feeds can be used with any RSS client by simply subscribing to the feed's URL. I, for example, use Emacs as my RSS reader, both at home on my desktop and outside on my laptop. I've already written a bunch of articles detailing the way I use Emacs, so check those out if you are interested. When I'm using my iPhone instead, I use another RSS reader, called NetNewsWire. There's nothing special about it, but it's simple and easy to use (although I've not yet managed to get attachments to download with it).

This final part of the blog post is optional reading, since in it I describe in more detail how I coded the solution. So, as a final note for those not interested in the last section: I hope this was an interesting read, and that it gave you a glimpse of the power that comes from the simplicity of RSS!

The solution I found can be broken down into 4 functions, which I will briefly describe. Before starting, though, I will admit that the following code is probably not the most beautiful, but it does the job it's supposed to do, so for now I'm satisfied.

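As far as libraries go, I used the following:

```python
import time                    # to sleep in the main loop
import schedule                # to schedule execution
import datetime                # to build and format dates
import requests                # to do GET/POST HTTP requests
from bs4 import BeautifulSoup  # to scrape HTML

# BACHELOR_URL, MASTER_URL, BASE_URL, BACHELOR_RSS_FILE and MASTER_RSS_FILE,
# used below, are configuration constants whose definitions are not shown here.
```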

The four functions are the following:

- `update_rss()`: This is the main function, which downloads the HTML pages containing all the news and tries to add each news item to the corresponding RSS feed as a new entry.

  ```python
  def update_rss():
      # Process both news-boards: (page to scrape, RSS file to update)
      for url, rss_file in ((BACHELOR_URL, BACHELOR_RSS_FILE),
                            (MASTER_URL, MASTER_RSS_FILE)):
          r = requests.get(url)
          soup = BeautifulSoup(r.text, 'html.parser')
          # Each news item is rendered as its own <table>
          entries = soup.find_all("table")
          # Walk the page bottom-up: since each new entry is inserted at the
          # top of the feed, the topmost item on the page ends up first
          for entry in reversed(entries):
              add_rss_entry(rss_file, entry)
  ```

- `add_rss_entry(filename, entry)`: This function generates the RSS entry for a particular announcement, and only adds it if it's "new", i.e. if it has not been added already.

  ```python
  def add_rss_entry(filename, entry):
      rss_entry = generate_rss_entry(entry)
      with open(filename, 'r', encoding='utf-8') as rss_file:
          contents = rss_file.readlines()
      # NOTE: only write if the entry is not already present
      if rss_entry not in "".join(contents):
          # The feed file is assumed to have its first item at line index 8,
          # right after the <channel> header
          contents.insert(8, rss_entry)
          with open(filename, 'w', encoding='utf-8') as rss_file:
              rss_file.writelines(contents)
  ```

- `generate_rss_entry(entry)`: This function creates a new RSS entry using the data extracted from the HTML markup of a single announcement.

  ```python
  from xml.sax.saxutils import escape, quoteattr


  def generate_rss_entry(entry):
      title, date, author, description, enclosure = extract_data(entry)
      # Escape &, < and > so that the feed stays well-formed XML
      rss_entry = (
          "<item>\n"
          f" <title>{escape(title)}</title>\n"
          f" <author>{escape(author)}</author>\n"
          f" <pubDate>{date}</pubDate>\n")
      # Only add an <enclosure> when the news item has an attachment;
      # quoteattr() escapes the URL and wraps it in quotes
      if enclosure:
          rss_entry += (f" <enclosure url={quoteattr(enclosure)}"
                        ' type="application/pdf" />\n')
      rss_entry += (
          f" <description>\n<![CDATA[{description}\n]]></description>\n"
          "</item>\n\n")
      return rss_entry
  ```

- `extract_data(entry)`: Finally, this function deals with scraping the data from the website. It is the most critical function, as it relies on the particular way the data is structured. I have to say that I was lucky that every post shares the same basic structure, otherwise this work would have been much, much harder.

  ```python
  from email.utils import format_datetime
  from zoneinfo import ZoneInfo  # Python 3.9+


  def extract_data(entry):
      header = str(entry.th)

      # get title
      title = header[header.find("\"/>") + 4:header.find("<br/>")]

      # get date, written on the site as "DD-MM-YYYY HH:MM"
      date = header[header.find("gray;\"> ") + len("gray;\"> "):header.find("inviato")].split(" ")
      dmy = date[0].split("-")
      hm = date[1].split(":")
      day, month, year = int(dmy[0]), int(dmy[1]), int(dmy[2])
      hour, minute = int(hm[0]), int(hm[1])
      # pubDate needs an RFC 822 date with a time zone: %z is empty for a
      # naive datetime, so attach Rome's zone (assumed to be the site's)
      date = format_datetime(datetime.datetime(year, month, day, hour, minute,
                                               tzinfo=ZoneInfo("Europe/Rome")))

      # get author
      author = header[header.find("inviato da ") + len("inviato da "):header.find("</span></th>")]

      # get description, turning newlines into HTML line breaks
      body = entry.select_one("tr:nth-of-type(2)")
      description = body.text.replace("\n", "<br />")

      # -- deal with links
      enclosure = ""
      for link in body.find_all("a"):
          href = link.get("href", "")
          if "http" in href:
              # remote URL <a href="..."> text </a>: show the URL itself
              description = description.replace(link.text, href)
          else:
              # relative link: treated as an attachment hosted on the site
              enclosure = BASE_URL + href

      return title, date, author, description, enclosure
  ```
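The trickiest detail in `extract_data` is the date: `<pubDate>` must follow RFC 822, including a time-zone offset, while `strftime("%z")` returns an empty string for a naive `datetime`. Here is a standalone sketch of just that conversion, assuming the site's timestamps look like `27-04-2020 10:30` (day-month-year, as the parsing code above implies). The function name `to_rfc822` is hypothetical, and the time zone is passed in explicitly so the example does not depend on the system's time-zone database.

```python
import datetime
from email.utils import format_datetime


def to_rfc822(raw, tz):
    """Convert a "DD-MM-YYYY HH:MM" timestamp to an RFC 822 date string."""
    naive = datetime.datetime.strptime(raw.strip(), "%d-%m-%Y %H:%M")
    # Attach the time zone so the result carries an explicit offset;
    # format_datetime uses English day/month names regardless of locale
    return format_datetime(naive.replace(tzinfo=tz))


cest = datetime.timezone(datetime.timedelta(hours=2))
print(to_rfc822("27-04-2020 10:30", cest))  # → Mon, 27 Apr 2020 10:30:00 +0200
```

In a real deployment, `zoneinfo.ZoneInfo("Europe/Rome")` (Python 3.9+) is a better choice than a fixed offset, since it switches between +0100 and +0200 with daylight saving time.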

To finish off, the main loop schedules the execution of the `update_rss()` function every morning at 08:00, so that each day the feeds get updated with the most recent announcements.

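```python
if __name__ == "__main__":
    # Run update_rss() once a day at 08:00 (local time of the machine)
    schedule.every().day.at("08:00").do(update_rss)

    # Keep checking whether a scheduled job is due
    while True:
        schedule.run_pending()
        time.sleep(1)
```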